Self-consistency cycle


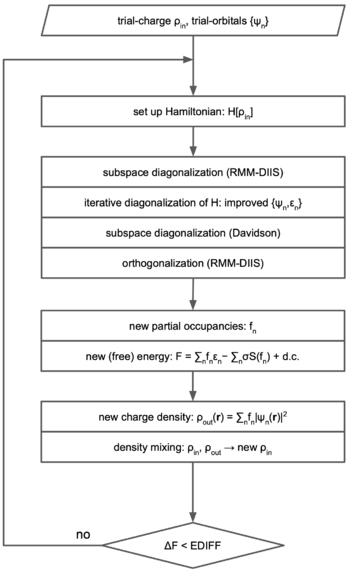


Revision as of 15:10, 19 October 2023

The term "Self-Consistency Cycle" (SCC) denotes a category of algorithms that determine the electronic ground state by a combination of iterative matrix diagonalization and density mixing.

Figure 1 shows a procedural flowchart of the self-consistency cycle:

1. When starting from scratch, the SCC starts with an initial guess for the electronic density of the system under consideration: VASP uses the approximation of overlapping atomic charge densities. The orbitals are initialized with random numbers. Alternatively, the SCC may (re-)start from the orbitals and/or electronic density obtained in a previous calculation.

2. The density defines the Hamiltonian.

3. By means of iterative matrix diagonalization techniques one obtains the NBANDS lowest-lying eigenstates of the Hamiltonian. The iterative matrix diagonalization algorithms implemented in VASP are the blocked-Davidson algorithm and the Residual Minimization Method with Direct Inversion in the Iterative Subspace (RMM-DIIS). By default, VASP uses the blocked-Davidson algorithm (ALGO = Normal).

4. After the eigenstates and eigenvalues of the Hamiltonian (i.e., orbitals and one-electron energies) have been determined with sufficient accuracy, the corresponding partial occupancies of the orbitals are calculated.

5. From the one-electron energies and partial occupancies the free energy of the system is computed.

6. From the orbitals and partial occupancies a new electronic density is constructed.

7. In principle, the new density could be used directly to define a new Hamiltonian. In most cases, however, this does not lead to a stable algorithm (owing to, e.g., charge sloshing). Instead, the new density is mixed with the old density. By default, VASP uses a Broyden mixer. The resulting density then defines the new Hamiltonian for the next round of iterative matrix diagonalization.

Steps 2-7 are repeated until the change in the free energy from one cycle to the next drops below a specific threshold (EDIFF).
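The cycle described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and is not VASP's implementation: the model is a hypothetical one-dimensional tight-binding chain with a mean-field on-site interaction `u`, the eigenstates are obtained by direct diagonalization (`numpy.linalg.eigh`) rather than by an iterative scheme, the occupancies are integers, and plain linear mixing with a parameter `mix` stands in for the Broyden mixer. The names `solve_fixed_density` and `run_scc` are likewise hypothetical.

```python
import numpy as np

def solve_fixed_density(rho_in, hop, v_ext, u, n_elec):
    """Steps 2-6 for a fixed input density; returns (rho_out, energy)."""
    # Step 2: the density defines the Hamiltonian (toy mean-field model).
    ham = hop + np.diag(v_ext + u * rho_in)
    # Step 3: eigenstates; direct diagonalization replaces the iterative solver.
    eps, orbitals = np.linalg.eigh(ham)
    # Step 4: occupancies; the n_elec lowest orbitals are fully occupied.
    occ = np.zeros(rho_in.size)
    occ[:n_elec] = 1.0
    # Step 5: energy from one-electron energies and occupancies, with a
    # double-counting correction evaluated for the input density.
    energy = occ @ eps - 0.5 * u * (rho_in @ rho_in)
    # Step 6: new density from orbitals and occupancies.
    rho_out = (orbitals ** 2) @ occ
    return rho_out, energy

def run_scc(n_sites=8, n_elec=3, u=1.0, mix=0.3, ediff=1e-8, max_cycles=200):
    """Toy self-consistency cycle; returns (rho, energy, cycles, converged)."""
    hop = -(np.eye(n_sites, k=1) + np.eye(n_sites, k=-1))  # open chain
    v_ext = np.linspace(-0.5, 0.5, n_sites)                # external potential
    # Step 1: initial guess for the density (uniform in this sketch).
    rho = np.full(n_sites, n_elec / n_sites)
    e_old = np.inf
    for cycle in range(1, max_cycles + 1):
        rho_out, energy = solve_fixed_density(rho, hop, v_ext, u, n_elec)
        # Stop once the energy change between cycles drops below ediff.
        if abs(energy - e_old) < ediff:
            return rho, energy, cycle, True
        e_old = energy
        # Step 7: mix the new density with the old one (linear mixing).
        rho = (1.0 - mix) * rho + mix * rho_out
    return rho, energy, max_cycles, False

rho, energy, cycles, converged = run_scc()
print(f"converged={converged} after {cycles} cycles, energy={energy:.8f}")
```

Setting `mix=1.0` in this sketch corresponds to using the new density directly; the damped update is the simplest way to suppress oscillations between successive densities.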

Note that when starting from scratch (ISTART = 0), the self-consistency cycle of VASP always begins with several (NELMDL) cycles in which the density is kept fixed at the initial approximation (overlapping atomic charge densities). This ensures that the wave functions, which are initialized with random numbers, have converged to something sensible before they are used to construct a new charge density.
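As an illustration, the tags mentioned so far could appear together in an INCAR file as in the fragment below. The values are assumptions chosen for the example, not recommendations, and should be checked against the documentation of each tag for the VASP version in use.

```
ISTART = 0        # start from scratch
ICHARG = 2        # initial density: overlapping atomic charge densities
INIWAV = 1        # initial orbitals: random numbers
ALGO   = Normal   # blocked-Davidson iterative diagonalization
NELMDL = -5       # initial cycles with the density kept fixed (example value)
EDIFF  = 1E-4     # threshold for the change between cycles (example value)
```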

For (a lot) more details on the self-consistency cycle and associated algorithms in VASP, we recommend the seminal papers by Kresse and Furthmüller.

The following section discusses the minimization algorithms implemented in VASP. We generally have one outer loop in which the charge density is optimized, and one inner loop in which the wavefunctions are optimized.

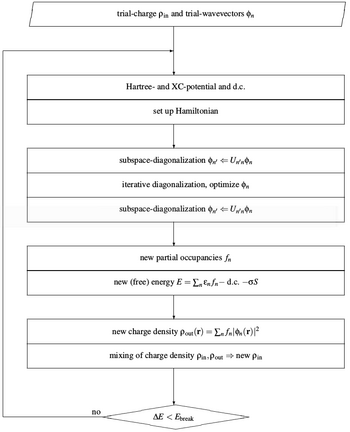

Most of the algorithms implemented in VASP use an iterative matrix-diagonalization scheme based on the conjugate gradient scheme [1][2], the blocked-Davidson scheme [3][4], or residual minimization with direct inversion in the iterative subspace (RMM-DIIS) [5][6]. The charge density is mixed with an efficient Broyden/Pulay mixing scheme [6][7][8]. Fig. 1 shows a typical flowchart of VASP. The input charge density and the wavefunctions are independent quantities (at start-up they are set according to INIWAV and ICHARG). Within each self-consistency loop, the charge density is used to set up the Hamiltonian; the wavefunctions are then optimized iteratively so that they approach the exact wavefunctions of this Hamiltonian. From the optimized wavefunctions a new charge density is calculated, which is then mixed with the old input charge density.

The conjugate gradient and residual minimization schemes do not calculate the exact Kohn-Sham eigenfunctions but an arbitrary linear combination of the NBANDS lowest eigenfunctions. It is therefore additionally necessary to diagonalize the Hamiltonian in the subspace spanned by the trial wavefunctions and to transform the wavefunctions accordingly (i.e., to perform a unitary transformation of the wavefunctions so that the Hamiltonian is diagonal in the subspace spanned by the transformed wavefunctions). This step is usually called subspace diagonalization, although a more appropriate name would be the Rayleigh-Ritz variational scheme in the subspace spanned by the wavefunctions.
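In formulas, the subspace diagonalization can be sketched as follows. The notation is an assumption made here: $\phi_i$ denotes the trial wavefunctions, taken to be orthonormal (otherwise an overlap matrix enters and the eigenvalue problem becomes a generalized one), $\bar{H}$ is the Hamiltonian matrix in the subspace, and $U$ is the unitary matrix of its eigenvectors.

$$
\bar{H}_{ij} = \langle \phi_i | H | \phi_j \rangle, \qquad
\sum_j \bar{H}_{ij}\, U_{jk} = \epsilon_k\, U_{ik}, \qquad
| \phi_k \rangle \leftarrow \sum_j U_{jk}\, | \phi_j \rangle .
$$

After the transformation, $\langle \phi_k | H | \phi_l \rangle = \epsilon_k \delta_{kl}$, i.e., the Hamiltonian is diagonal in the subspace spanned by the transformed wavefunctions.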

The subspace diagonalization can be performed before or after the conjugate gradient or residual minimization scheme. Our tests indicate that performing it beforehand is preferable during self-consistent calculations.

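In general, all iterative algorithms work very similarly. The core quantity is the residual vector of a trial wavefunction, i.e., the result of applying the Hamiltonian, shifted by the approximate eigenvalue, to that wavefunction.

This residual vector is added to the wavefunction; the algorithms differ in exactly how this is done.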
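The role of the residual vector can be illustrated with a minimal Python sketch, assuming a small real symmetric matrix as the Hamiltonian and a normalized trial vector `phi`. The residual is taken as `(H - eps_app) phi`, with `eps_app` the Rayleigh quotient of `phi`. The fixed-step update below is plain steepest descent, chosen only because it is the simplest way to add the residual to the wavefunction; it is not one of the schemes used in VASP, and the names `residual_vector` and `relax_lowest_state` are hypothetical.

```python
import numpy as np

def residual_vector(ham, phi):
    """Return the residual (H - eps_app) phi and the approximate eigenvalue."""
    phi = phi / np.linalg.norm(phi)
    eps_app = phi @ ham @ phi            # Rayleigh quotient of the trial vector
    return ham @ phi - eps_app * phi, eps_app

def relax_lowest_state(ham, phi, step=0.2, n_steps=500):
    """Repeatedly add a multiple of the residual to the trial vector."""
    for _ in range(n_steps):
        res, _ = residual_vector(ham, phi)
        # Steepest descent: move against the residual, then renormalize.
        phi = phi / np.linalg.norm(phi) - step * res
    phi = phi / np.linalg.norm(phi)
    return phi, residual_vector(ham, phi)[1]

# Small symmetric test matrix with well-separated eigenvalues.
ham = np.diag(np.arange(6.0)) + 0.1 * (np.ones((6, 6)) - np.eye(6))
phi, eps = relax_lowest_state(ham, np.ones(6))
print(eps, np.linalg.eigvalsh(ham)[0])
```

The residual vanishes exactly when `phi` is an eigenvector, which is why its norm is a natural measure of how well a trial wavefunction has converged.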

References

1. M. P. Teter, M. C. Payne, and D. C. Allan, Phys. Rev. B 40, 12255 (1989).

2. D. M. Bylander, L. Kleinman, and S. Lee, Phys. Rev. B 42, 1394 (1990).

3. R. Davidson, in Methods in Computational Molecular Physics, edited by G. H. F. Diercksen and S. Wilson, Vol. 113 of NATO Advanced Study Institute, Series C (Plenum, New York, 1983), p. 95.

4. B. Liu, in Report on Workshop "Numerical Algorithms in Chemistry: Algebraic Methods", edited by C. Moler and I. Shavitt (Lawrence Berkeley Lab., Univ. of California, 1978), p. 49.

5. D. M. Wood and A. Zunger, J. Phys. A 18, 1343 (1985).

6. P. Pulay, Chem. Phys. Lett. 73, 393 (1980).

7. S. Blügel, PhD Thesis, RWTH Aachen (1988).

8. D. D. Johnson, Phys. Rev. B 38, 12807 (1988).